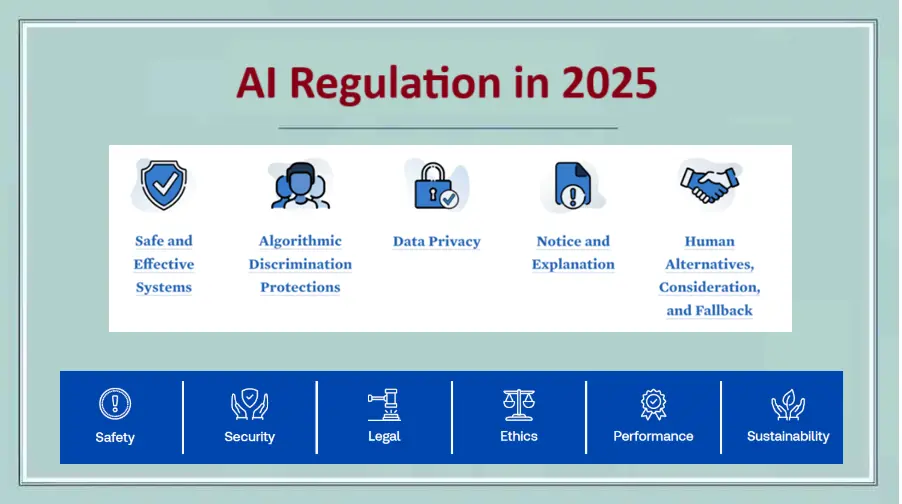

The more widely AI is deployed across industry, the more critical AI safety regulation becomes. AI technology is used in a range of industries, from health care and self-driving cars to finance and defense. Alongside its advantages, AI also carries risks. If not properly regulated, these technologies could cause harm, entrench bias or even infringe on people’s rights.

This post covers the latest news on AI safety regulation: what governments, researchers and tech companies are doing to make AI safe and ethical for everyone.

Why We Need AI Safety Regulation

AI is changing industries and our daily lives. As AI systems grow more capable, they are being applied in areas that directly affect people’s lives, including medical diagnosis, policing and military decisions. AI holds the promise of greater efficiency and progress on big challenges, but it also carries risks.

Possible risks include AI making biased decisions, infringing on privacy, or causing harm in unintended ways. For instance, autonomous weapons could decide matters of life and death without human intervention, and AI-powered surveillance systems might encroach on privacy and personal freedoms.

For these reasons, there is an increasing demand for AI safety regulation. We need rules to ensure the responsible development and use of AI. Without clear rules, AI could do harm or treat certain groups of people unfairly.

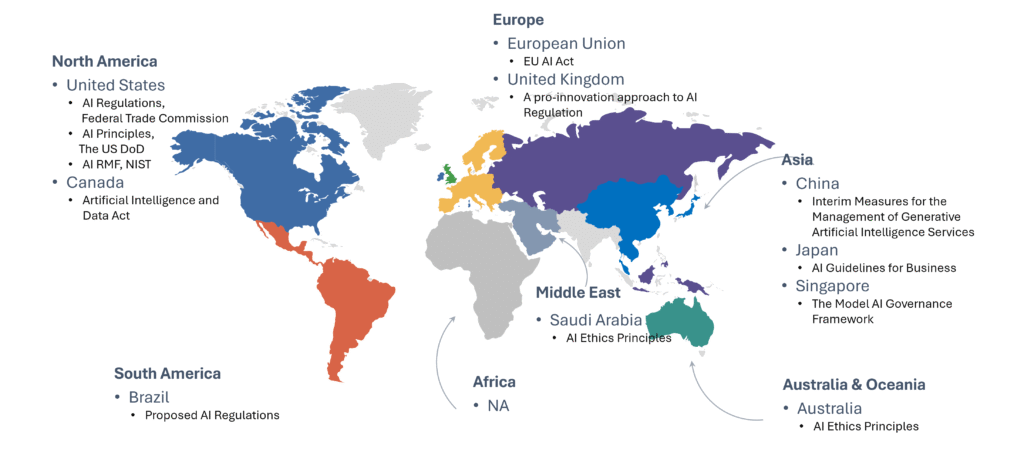

Global Efforts in AI Safety Regulation

Many countries and organizations around the world are drafting AI regulations and trying to keep pace with the technology. Here are some of the most significant efforts:

European Union’s AI Act

The AI Act was proposed by the European Commission in 2021 and formally adopted in 2024, making it a central regulation of AI in Europe. The law is an effort to make sure AI is safe to use, and it takes a risk-based approach: obligations depend on the level of risk an AI system presents. High-risk applications of artificial intelligence, such as those in health care or transportation, are subject to more stringent requirements. These systems need to be transparent, accountable and tested for safety over time.

Lower-risk AI systems, like chatbots or spam filters, still need to follow basic rules of operation, but they are not subject to as many constraints. The EU wants AI to respect people’s rights and not discriminate against anyone.

The Act is a significant development in AI safety regulation and paves the way for responsible AI deployment in Europe.
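To make the risk-based idea concrete, here is a minimal sketch of how a compliance team might record which tier a use case falls into and what that implies. The tier names, the `EXAMPLE_TIERS` mapping and the `obligations_for` helper are hypothetical and built only from the examples in this post; they are not the Act’s legal categories and should not be read as legal guidance.

```python
from enum import Enum


class RiskTier(Enum):
    """Illustrative tiers only; not the legal categories defined in the AI Act."""
    HIGH = "high"
    LOWER = "lower"


# Hypothetical mapping built from the examples mentioned in this post.
EXAMPLE_TIERS = {
    "health care": RiskTier.HIGH,
    "transportation": RiskTier.HIGH,
    "chatbot": RiskTier.LOWER,
    "spam filter": RiskTier.LOWER,
}

# Obligations as summarized in this post, not quoted from the regulation.
OBLIGATIONS = {
    RiskTier.HIGH: ["transparency", "accountability", "ongoing safety testing"],
    RiskTier.LOWER: ["basic rules of operation"],
}


def obligations_for(use_case: str) -> list[str]:
    """Return the illustrative obligations for a known example use case."""
    tier = EXAMPLE_TIERS.get(use_case.strip().lower())
    if tier is None:
        # Unknown use cases need a real legal assessment, not a lookup table.
        raise KeyError(f"No example tier recorded for: {use_case!r}")
    return OBLIGATIONS[tier]


if __name__ == "__main__":
    for case in ("Health care", "Chatbot"):
        print(case, "->", obligations_for(case))
```

The point of the sketch is the shape of the rule, not the details: the higher the tier, the longer the list of requirements a system has to meet.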

United States Approach

The U.S. has not yet established a unified national law governing AI, but many government agencies and states are acting. The National AI Initiative Act of 2020 seeks to accelerate AI research and development while taking safety risks into account. The Federal Trade Commission (FTC) is also working to shield consumers from AI harms, with a focus on concerns such as data privacy and fairness.

Some states, including California, have already adopted laws on privacy and on how companies may use personal data. The California Consumer Privacy Act (CCPA), for instance, gives consumers rights over their personal data, and those rights extend to AI-driven uses of that data.

China’s AI Regulations

Among the world’s major economies, China is at the forefront of AI. In 2017 it released the New Generation Artificial Intelligence Development Plan, which includes some guidelines for regulating AI. In 2021 China published new AI ethics guidelines in a bid to promote responsible use of the technology. These guidelines are aimed at ensuring AI is transparent, accountable and free of bias. They are also intended to ensure AI contributes to economic growth while keeping society stable.

International Collaboration

Because AI is a global technology, countries must cooperate to establish regulation that can be implemented consistently worldwide. One such effort is the Organization for Economic Co-operation and Development (OECD) Principles on Artificial Intelligence, which have been adopted by 42 countries. The principles are designed to help ensure AI is developed in a way that benefits everyone and does no harm.

The Global Partnership on AI (GPAI), launched with 15 founding members, also focuses on establishing international standards for AI. Its aim is to ensure that AI is deployed ethically and that its risks are taken into account.

Challenges in AI Safety Regulation

Support for AI safety regulation is rising, but making effective laws and guidelines isn’t simple. These are some of the most pressing challenges.

The Rapid Pace of AI Technology

AI is evolving at a pace that often outstrips the ability of governments to regulate it. New legal guidelines can be outdated by the time they are enacted, which makes it difficult for regulators to write rules that do not quickly become obsolete.

Global Differences in Regulation

AI is a global technology, but the rules governing it and the ethical debates around its use vary from country to country. For instance, China’s approach to AI is radically different from that of the EU or the U.S. This makes it challenging for companies that do business in multiple countries to keep up with differing rules and regulations.

Ethical and Social Concerns

AI has so many implications for society that deciding what is ethical can be challenging. For instance, should AI systems be deployed to monitor what people are doing? How can we keep AI from making biased choices? The problem is that right and wrong are not defined the same way everywhere: what one culture considers morally justified, another will not.
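On the bias question, one common starting point is simply to measure whether a system’s decisions differ across groups. The sketch below compares approval rates between groups and reports the largest gap. The function names and sample data are hypothetical, and this is only one of several possible fairness measures; a small gap does not by itself show that a system is fair.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Compute the share of positive decisions for each group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is True or False.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(decisions):
    """Return the difference between the highest and lowest group rates."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Made-up sample data for illustration only.
    sample = [
        ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", False),
        ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
    ]
    print(selection_rates(sample))
    print(parity_gap(sample))
```

What counts as an acceptable gap is exactly the kind of judgment that differs between cultures and legal systems, which is why a measurement like this informs the ethical debate rather than settling it.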

Accountability and Enforcement

Even where regulations exist, they are difficult to enforce. AI systems are frequently “black boxes” that defy explanation, which makes it difficult to assign responsibility when things go wrong. Clear accountability and enforcement mechanisms are essential to building trust in AI.

What’s Next for AI Safety Regulation

AI safety regulation will continue to develop as AI expands. More countries will probably enact regulations, and those already on the books will evolve to address new advances in AI. Governments, companies and international organizations will all need to work together to establish rules that keep AI safe and fair for everyone.

AI developers will also have to be more mindful in how they design their systems. They need to consider safety and ethics from the beginning of development, not as an afterthought.

Over time, we will likely see more stringent regulation, better enforcement and greater transparency about how AI systems operate. This will help to ensure that AI remains a force for the good of society with minimal risk.

Conclusion

AI safety regulation is of the utmost importance as AI grows in prominence and influence in our world. Governments and organizations around the world are working tirelessly to establish rules under which AI can be developed safely and used responsibly. But much remains to be done, including keeping up with the rapid development of AI and finding common ground across countries.

With good regulation, AI can be safe and can help solve significant worldwide problems rather than create them. But it is crucial that we put safety, fairness and accountability first, so that AI benefits us all. Businesses, too, can contribute by adopting ethical AI practices and using the technology responsibly.